Artificial Intelligence in customer service adopting human biases

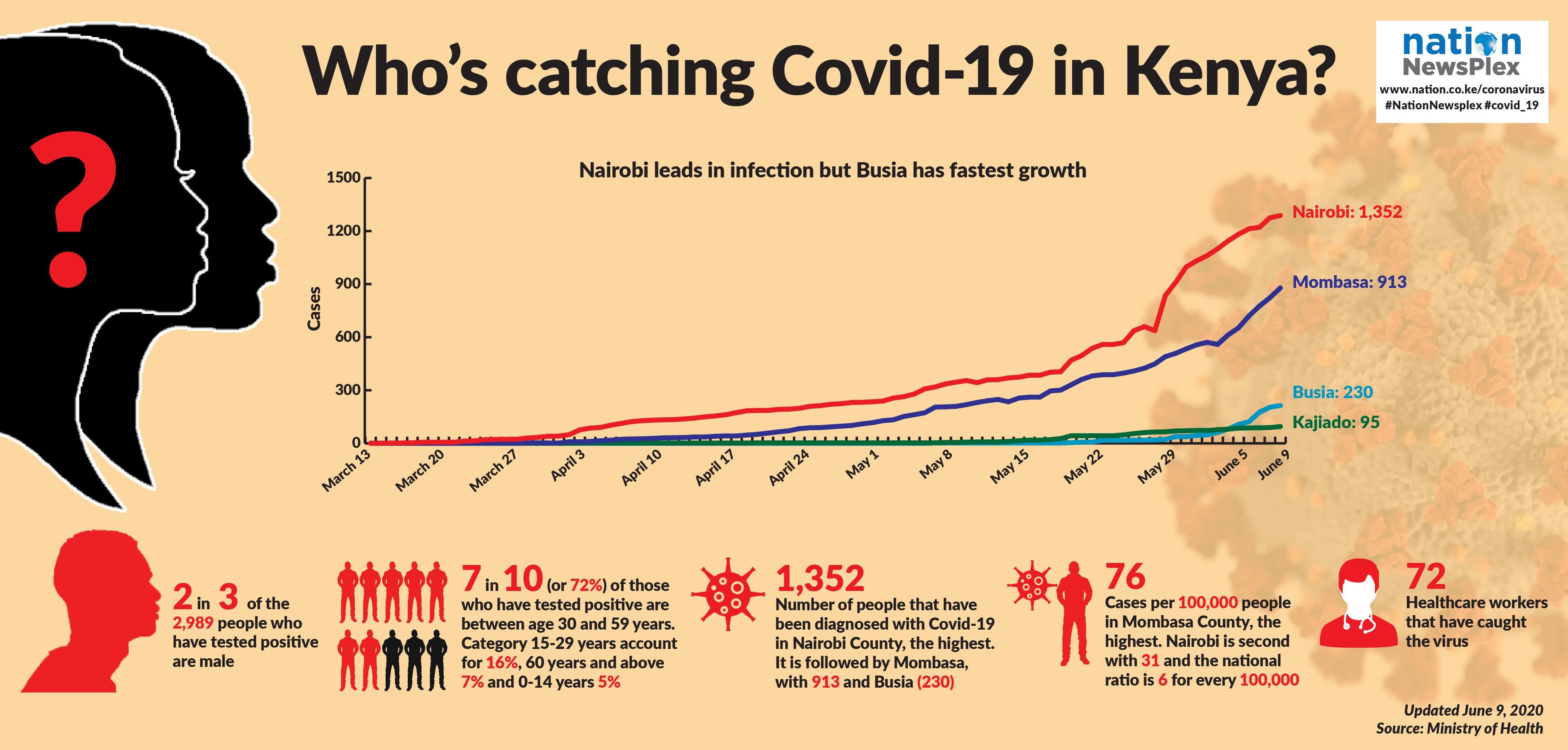

In 1983, renowned science fiction writer Isaac Asimov predicted how robots would influence society in 2019. “Jobs that will disappear will be just those routine clerical and assembly line jobs that are simple, repetitive and ‘stultifying’, and have, on the whole, continued to exist in huge numbers with the addition of computing technology,” he said. Artificial Intelligence (AI) is fast turning this prediction into a reality for the once-lucrative trade of Business Process Outsourcing (BPO), which supports customer operations for many large firms.

In 2018, Safaricom launched Zuri, a chatbot on Facebook Messenger and Telegram that helps customers perform simple tasks such as unsubscribing from SMS services and reversing M-Pesa transactions. In the same year, Jubilee Insurance launched a chatbot christened Julie (Jubilee Live Intelligent Expert) to assist customers with everyday insurance queries. As these AI-powered customer bots become popular, the question arises of what human form they should take, or how AI bots should be humanised.

A Journey through Bias

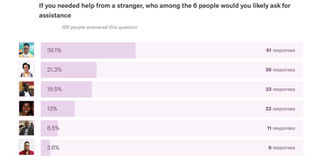

To find out what type of person people feel most comfortable asking for help, the writer set up a survey built around different physical attributes. The survey captured characteristics such as gender, hair type, spectacles, skin tone and age. Respondents were asked two questions: which person they were most likely to ask for assistance and which they were least likely to ask, along with their reasons. The image below shows the survey options.

Six options from which respondents picked the people they were most likely and least likely to ask for help.

A total of 263 people responded to the survey on Twitter, Facebook and WhatsApp. Although the experiment lacked elements of a proper scientific study, such as a random sample, the results were still interesting. Image A had the highest preference, at just over a third (36 percent). It was followed by Image D at about a fifth (22 percent) and Image B at 18 percent. It seemed a young lady with short hair, fair skin and spectacles was the most approachable stranger to the survey participants. The diagram at the top of the page shows the votes for all the images.

This result may have captured a bias in human thinking. Women, especially young women, have long been employed in front-desk operations, and as such people associate them with being approachable. For instance, more than half (59 percent) of customer operations employees at Safaricom are women. It follows that AI systems built to support customer operations may pick up these biases when the bots are humanised. This may also explain why Safaricom's Zuri chatbot, like Jubilee's Julie, is personified as a young lady (see image below). Note the odd resemblance between Jubilee's Julie and Image A in the survey.

Safaricom's Zuri chatbot and Jubilee's Julie (left and right, respectively) each personified as a young lady.

Other customer assistance systems such as Apple's Siri, Google Assistant and Samsung Bixby use female voices. A study by Ahmed Mansoora on the impact of gender differences on customer satisfaction shows that, in some cases, gender difference has a significant impact on customer satisfaction. In the documentary May Day: The Passenger Who Landed a Plane (2014), 77-year-old John Wildey was a passenger in a Cessna light aircraft when his friend, the pilot, died at the controls. John was stranded 1,500 feet up, in fading light, in a plane he did not know how to fly. Air-traffic controllers talked him through how to land the plane, but when he became nervous they asked a female pilot to give the instructions. He landed the plane safely, and it is thought that the female voice contributed to the success.

The least likely face

On the flip side, the survey asked which person a respondent was least likely to ask for help. Out of 150 respondents, more than half (52 percent) picked Image E and a quarter picked Image F. The diagram below shows how the faces performed.

The main reasons respondents gave for avoiding the two people they were least likely to ask for help were that their faces looked arrogant, unapproachable, unfriendly and snobbish. The word cloud below shows all the reasons provided for avoiding a character.

Word cloud showing reasons respondents gave as to why they would or would not approach persons A to F.

When AI customer agents are given personalities, they are least likely to be a middle-aged man in a suit or a young bearded man.

It is worth noting that the person in the highest-voted photo (Image A) is not a customer care agent. She is Ms Catherine Gitau, a Data Scientist at Africa's Talking.

The author is a data scientist. @blackorwa